A couple of weeks ago I tweeted a projection that the $/GB for flash drives would meet the $/GB for hard drives within 3-4 years. It was more of a feeling, based upon current pricing with Moore’s Law applied, than a well-researched statement, but it felt about right. I’ve since been thinking some more about what this might mean in the context of current storage industry offerings from the likes of EMC, NetApp and Oracle.

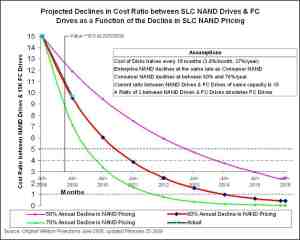

First of all I did a bit of research – if my 3-4 year guess-timate was out by an order of magnitude then there would not be much point in writing this article (yet). I wanted to find out what the actual trends in flash memory pricing look like and how these might project over time, and I came across the following article: Enterprise Flash Drive Cost and Technology Projections. Though this article is now over a year old, it shows the following chart, which illustrates the effect of the observed 60% year-on-year decline in flash memory pricing:

This 60% annual drop in costs is actually an accelerated version of Moore’s Law, and does not take into account any radical technology advances that may happen within the period. It is probably driven for the most part by the consumer thirst for flash technology in iPods and so forth, but it naturally ripples back up into the enterprise space in the same way that Intel and AMD’s processor technologies do.
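The arithmetic behind a projection like this can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `years_to_parity`, the starting price ratio and the hard drive decline rate are all hypothetical and are not taken from the article or its chart; only the 60% annual flash decline comes from the text above.

```python
import math


def years_to_parity(flash_price, disk_price, flash_decline, disk_decline):
    """Years until flash $/GB equals disk $/GB, assuming constant annual declines.

    Solves flash_price * (1 - flash_decline)**t == disk_price * (1 - disk_decline)**t
    for t. All inputs are illustrative assumptions, not measured data.
    """
    if flash_price <= disk_price:
        return 0.0  # flash is already at or below parity
    flash_factor = 1.0 - flash_decline  # fraction of the price left after one year
    disk_factor = 1.0 - disk_decline
    if flash_factor >= disk_factor:
        raise ValueError("flash must fall faster than disk to ever reach parity")
    return math.log(disk_price / flash_price) / math.log(flash_factor / disk_factor)


if __name__ == "__main__":
    # Hypothetical starting point: flash costs 20x as much per GB as disk.
    print(round(years_to_parity(20.0, 1.0, 0.60, 0.00), 1))  # disk price flat
    print(round(years_to_parity(20.0, 1.0, 0.60, 0.30), 1))  # disk also falling 30%/year
```

With the hypothetical 20x starting gap, a flat hard drive price gives parity in about 3.3 years, while a hard drive price that itself falls 30% a year pushes parity out to about 5.4 years. The result is sensitive to both assumptions, which is why the real figures matter.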

So let’s just assume that my guess-timate and the above chart are correct (they almost precisely agree) – what does that mean for storage products moving forward?

Looking at recent applications of flash technology, we see that EMC were the first off the blocks by offering the option of relatively large flash drives as drop-in replacements for the hard drives in their storage arrays. NetApp took a different approach of putting the flash memory in front of the drives as another caching layer in the stack. Oracle have various options in their (formerly Sun) product line and a formalised mechanism for using flash technology built into the 11g database software and into the Exadata v2 storage platform. Various vendors offer internal flash drives that look like hard drives to the operating system (whether connected by traditional storage interconnects such as SATA or by PCI Express). If we assume that the cost of flash technology becomes equivalent to hard drive storage in the next three years, I believe all these technologies will quickly become the wrong way to deploy flash technology, and only one (Oracle) has an architecture which lends itself to the most appropriate future model (IMHO).

Let’s flip back to reality and look at how storage is used and where flash technology disrupts that when it is cheap enough to do so.

First, location of data: local or networked in some way? I don’t believe that flash technology disrupts this decision at all. Data will still need to be local in certain cases and networked via some high-speed technology in others, in much the same way as it is today. I believe that the networking technology will need to change for flash technology, but more on that later.

Next, the memory hierarchy: Where does current storage sit in the memory hierarchy? Well, of course, it is at the bottom of the pile, just above tape and other backup technologies if you include those. This is the crucial area where flash technology disrupts all current thinking – the final resting place for data is now close to or equal to DRAM speeds. One disruptive implication of this is that storage interconnects (such as Fibre Channel, Ethernet, SAS and SATA) are now a latency and bandwidth bottleneck. The other, potentially huge, disruption is what happens to the software architecture when this bottleneck is removed.

Next, capacity: How does flash capacity compare with hard drive capacity? Well that’s kind of the point of this posting… it’s currently quite a way behind, but my prediction is that they will be equal by 2013/2014. Importantly though, flash capacities will then start to accelerate away from hard drive capacities. Given the exponential growth of data volumes, perhaps only semiconductor-based storage can keep up with the demand?

Next, IOPS: This is the hugely disruptive part of flash technology, and is a direct result of a dramatically lowered latency (access time) when compared to hard disk technology. Not only is the latency lowered, but semiconductor-based storage is more or less contention-free given the absence of serialised moving parts such as a disk head. Think about it – the service time for a given hard drive I/O is directly affected by the preceding I/O and where the head was left on the platter. With solid-state storage this does not occur and service times are more uniform (though writes are consistently slower than reads).
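The dependence on the preceding I/O can be illustrated with a toy model. This is a deliberately simplified sketch: the function names, the linear seek model and every latency figure below are made-up assumptions for illustration, not measurements of any real drive.

```python
import random


def disk_service_times(block_addresses, full_stroke_ms=8.0, rotation_ms=6.0,
                       total_blocks=1_000_000, seed=0):
    """Toy hard drive: each I/O pays a seek proportional to the distance from
    where the previous I/O left the head, plus a random rotational wait."""
    rng = random.Random(seed)
    head = 0  # assumed starting position of the head
    times = []
    for block in block_addresses:
        seek = full_stroke_ms * abs(block - head) / total_blocks
        rotational_wait = rng.uniform(0.0, rotation_ms)
        times.append(seek + rotational_wait)
        head = block  # the next I/O starts from here
    return times


def flash_service_times(operations, read_ms=0.1, write_ms=0.3):
    """Toy flash device: cost depends only on the operation type, not on
    whatever request came before it."""
    return [read_ms if op == "read" else write_ms for op in operations]


if __name__ == "__main__":
    # The same final request (block 250,000) after two different predecessors.
    # Rotational wait is switched off so only the seek effect is visible.
    print(disk_service_times([0, 250_000], rotation_ms=0.0))
    print(disk_service_times([1_000_000, 250_000], rotation_ms=0.0))
    print(flash_service_times(["read", "write", "read"]))
```

In this model the identical request to block 250,000 costs 2.0 ms after a request to block 0 but 6.0 ms after a request to block 1,000,000, whereas the flash model returns the same time for every read regardless of history.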

These disruptions mean that the current architectures of storage systems are not making the most of semiconductor-based storage. Hey, why do I keep calling it “semiconductor-based storage” instead of SSD or flash? The reason is that the technologies used in this area are changing frequently: from DRAM-based systems to NOR-based flash to NAND-based flash to DRAM-fronted flash; from single-level cells to multi-level cells; from battery-backed to “Super Cap” backed. Flash, as we know it today, could be outdated as a technology in the near future, but “semiconductor-based” storage is the future regardless.

I think that we now need technologies that look more like Oracle Exadata v2, with low-latency RDMA interfaces directly into the Operating System/Database. However, they need to easily and natively support other types of storage (unstructured data such as files, VMware datastores and so forth). The Exadata architecture lends itself well to changes in this area in both hardware trends and access protocols.

Perhaps more importantly, we are also only just beginning to understand the implications in software architecture for the disrupted memory hierarchy. We simply cannot continue to treat semiconductor-based storage as “fast disk” and need to start thinking, literally, outside the box.
